Developer-Led Landscape: Complexity, Automation & A Future of Autonomous Development

Peak DevOps will cause consolidation and 500 corporate failures whose fallout seeds a 3rd wave of Software Industrialization driven by a multi-trillion dollar Autonomous Development market.

Complexity and agility have created a “Peak DevOps” moment that lowers developer job satisfaction in the face of sluggish, pre-inflation wage increases.

Subsequently, companies will face - and then unsuccessfully fight - higher costs to build and maintain software systems that under-deliver against unrelenting user expectations.

Peak DevOps will cause a pivotal transition for the developer industry.

Platform consolidation and startup over-capitalization preface the failure of ~500 sub-$10M ARR developer companies, whose fallout will:

Seed a 3rd wave of software industrialization driven by Autonomous Software Development.

Autonomous Software Development would launch a multi-trillion dollar industry, mint a plethora of new superstar engineering founders, and cause a seismic power shift that could supplant existing platforms provided by Microsoft, IBM, GitLab, Atlassian, Oracle, Snowflake, Informatica, TIBCO, Software AG, and Confluent.

In this article, I outline the nature of complexity in software development, how automation is applied in the software process, and the potential for autonomous software development. I then introduce an autonomous development marketecture and discuss the early vendors that make up this new landscape.

Previous developer-led landscape papers were analytical and backward looking. This paper discusses the nature of complexity and how automation has shaped the landscape and, for the first time, imagines what the future holds in store for developers.

Previous Developer-Led Landscape Papers

Developer-Led Landscape: Some 2022 Predictions

Developer-Led Landscape: 2021 Edition

Developer-Led Landscape: Latency-Optimized Development

Developer-Led Landscape: 2021 Trends Foretell New Approaches To DevOps

Developer-Led Landscape: Cloud-Native Development

Developer-Led Landscape: The Original

About Dell Technologies Capital

We are investors, and if you are in a developer-related business, I’d love to connect.

We lead investments in disruptive, early-stage startups in enterprise and cloud infrastructure. Entrepreneurs partner with us for our deep domain expertise, company building experience, and unique access to the enterprise ecosystem.

We have 150 investments with 60 exits, 14 unicorns, and 9 IPOs. We invest ~$250M per year.

Also, many thanks to Riley Stutz, our phenomenal analyst, who helped with the research and material preparation.

1.0: The Supply Of Developer Talent Is Not Meeting The Demand For New Digital Services.

Generally, over the past 20 years, the rate of growth for new software development talent has been linear while data growth has been non-linear.

Data, and the rate of data growth, is the strongest reflection of the demand for new digital services. If the data exists, then there is demand for its consumption. More data leads to more demand. If not, we’d discard the data.

Slashdata, a developer research organization, has a refined study that tracks how many developers exist. Their definition includes anyone that identifies as participating in the development process, so it’s an expansive view that includes coders, team leaders, creatives, hobbyists, and students.

A recent GitHub Octoverse survey found that 61% of the people with a GitHub account were developers, while 39% otherwise participated in the ecosystem. That ratio, applied to Slashdata’s 31M developer number, would imply roughly 19M global developers, only some portion of whom are paid for their talents.

Regardless, the 59% rate of data growth far outpaces the 13% entrance of new developers.
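To make the gap concrete, here is a minimal sketch of the arithmetic above. It assumes that both growth rates are annual and compound year over year, which the figures do not state explicitly. The function names are hypothetical.

```python
# Back-of-the-envelope arithmetic using the figures quoted above.
# Assumption: the 59% and 13% rates are annual and compound year over year.

SLASHDATA_DEVELOPERS = 31_000_000   # Slashdata's expansive developer count
GITHUB_DEVELOPER_SHARE = 0.61       # share of GitHub accounts that are developers
DATA_GROWTH = 0.59                  # rate of data growth
DEVELOPER_GROWTH = 0.13             # rate of new developer entrance


def estimated_developers() -> float:
    """Apply the GitHub developer share to Slashdata's headline number."""
    return SLASHDATA_DEVELOPERS * GITHUB_DEVELOPER_SHARE


def data_per_developer_multiple(years: int) -> float:
    """Growth in data per developer after `years`, relative to today."""
    return ((1 + DATA_GROWTH) / (1 + DEVELOPER_GROWTH)) ** years


if __name__ == "__main__":
    print(f"Estimated developers: {estimated_developers() / 1e6:.1f}M")
    for years in (1, 5, 10):
        multiple = data_per_developer_multiple(years)
        print(f"After {years} years: {multiple:.1f}x data per developer")
```

Under that assumption, each developer would be responsible for roughly 40% more data every year, which is the pressure the next section explores.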

1.1: Over Time, Developers Build Systems That Consume More Data Per Developer.

Are developers more productive as a result?

There is an interesting concept in economics called the Jevons Paradox. It occurs when technological progress increases the efficiency with which a resource is used, yet consumption of that resource rises because of increasing demand. Oddly, it implies that the increase in demand is tied to our efforts to increase efficiency.

As the data per developer increases, Jevons Paradox implies that the demand for that data increases equivalently.

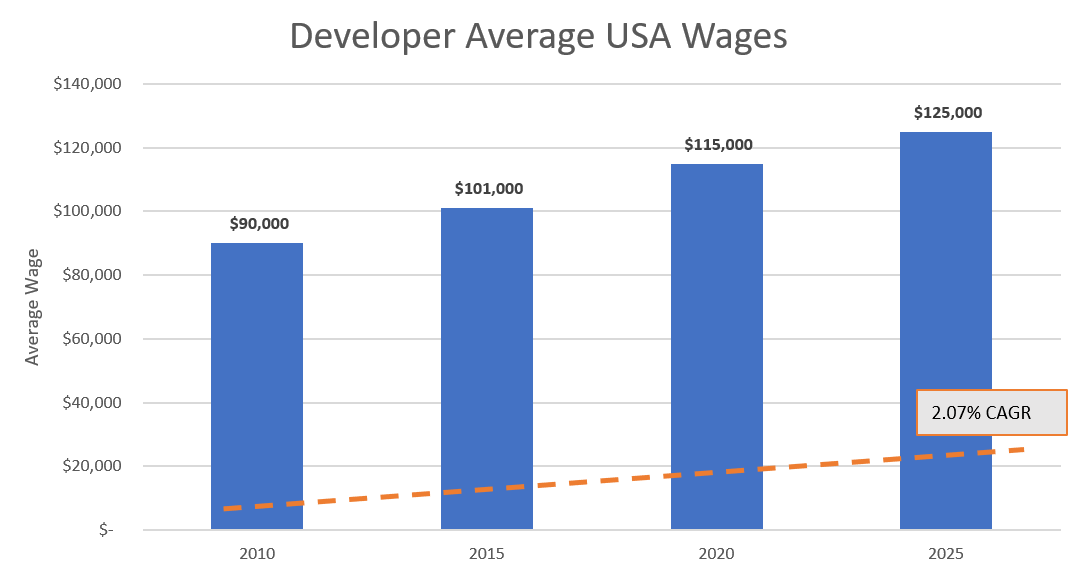

1.2: Wage Increases For Software Developers Are Not Creating A Wealth Effect That Can Bring Supply In Balance With Demand.

The average wage growth for software developers has barely kept pace with inflation. Despite the riches that people hear about for top-tier engineering positions within Silicon Valley startups, those positions reflect a small portion of the global developer population. The average developer’s wages haven’t yet increased at a pace that would attract a larger number of entrants who perhaps don’t love engineering but would enter the field just for its attractive wage.

This data doesn’t take into account the growing number of developers who become consultants, a switch that often creates an opportunity for a significant wage increase.

It’s estimated that less than 10% of the global developer population would identify as a consultant.

1.3: Developer Growth Is Limited By Generational Factors And Short Careers.

There are other generational factors that limit the growth of talent within our industry, including STEM education, gender discrimination and fair pay, societal pressures, and the strain felt by seasoned technologists who maintain near-continuous upskilling to keep pace with the change of technology.

1.3.1: Bottoms Up Analysis Suggests That Rate Of Growth Is 1.5M New Professional Developers Per Year.

The rate of growth of developers may be much lower than Slashdata speculates. About 400,000 computer science students graduate each year globally. There are probably another 400,000 students from computer engineering, electrical engineering, mathematics, and social sciences (you’d be surprised at how many psychology majors are developers!) who transition into development. Coding boot camps produce another 100,000 graduates. Assuming self-taught developers and hobbyists also show up, you can estimate that the number of new paid developers is 1M to 1.5M per year.

1.3.2: Developer Attrition And Burnout Reduce Professional Developer Population By 200K.

Due to cultural conflicts, the constant pace of change, and continuous upskilling required, many developers experience burnout or shift careers. What is the average career of a developer?

Assuming that people of all age groups are willing to participate in surveys and that there is no data bias, if StackOverflow represents the general developer population, then it looks like 30% of professional developers are older than 35 years.

This would suggest a career lifespan of 15-20 years, which implies the attrition rate of professional developers is ~200K per year. Nnamdi, a partner at LSVP, has confirmed this in an alternative way in his article, “There Will Never Be Enough Developers”, where he says that 30% of engineering and computer science graduates drop out of their field by mid-career. This is attributed to the constant re-training and upskilling required.

This rate of attrition certainly poses challenges for the world to increase and sustain the base of developers that exist.

For the rate of growth of developers to reliably exceed 1.5M / year, the attrition rates need to decline and the number of new paid developer positions must exceed 2M. The labor statistics data don’t currently support that this dynamic is playing out.
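The bottoms-up estimate can be reproduced with a few lines of arithmetic. This is a rough sketch using only the figures quoted in this section. The self-taught range is not sourced; it is simply whatever closes the gap between the known sources and the 1M to 1.5M estimate, and all names are hypothetical.

```python
# Bottoms-up estimate of professional developer supply, using the
# figures quoted in this section. The self-taught range is implied,
# not sourced: it is whatever closes the gap to the 1M to 1.5M estimate.

KNOWN_SOURCES = {
    "computer science graduates": 400_000,
    "adjacent-degree graduates": 400_000,
    "coding boot camp graduates": 100_000,
}
NEW_PAID_DEVELOPERS = (1_000_000, 1_500_000)  # low and high estimate per year
ATTRITION_PER_YEAR = 200_000


def known_inflow() -> int:
    """Sum of the inflow sources that have an explicit figure."""
    return sum(KNOWN_SOURCES.values())


def implied_self_taught() -> tuple[int, int]:
    """Self-taught entrants needed to reach the low and high estimates."""
    low, high = NEW_PAID_DEVELOPERS
    return low - known_inflow(), high - known_inflow()


def net_growth() -> tuple[int, int]:
    """New paid developers per year after subtracting attrition."""
    low, high = NEW_PAID_DEVELOPERS
    return low - ATTRITION_PER_YEAR, high - ATTRITION_PER_YEAR


if __name__ == "__main__":
    print("Known inflow:", known_inflow())
    print("Implied self-taught range:", implied_self_taught())
    print("Net growth range:", net_growth())
```

With these inputs, net growth lands between 0.8M and 1.3M developers per year, below the 1.5M threshold discussed above.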

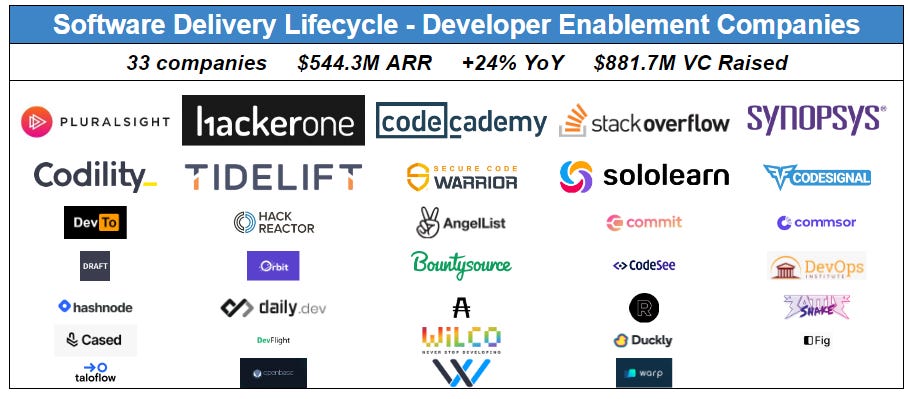

1.4: Developer Enablement Is A $500M Ecosystem That Engages And Monetizes Developer Communities To Improve Skills, Propagate Best Practices, Solve Technical Challenges, And Facilitate Job Searches.

A consequence (and arguably a benefit) of the limited talent in software construction is the ecosystem of support vendors that has emerged to fill the void by helping software talent improve their hard and soft skills.

Developer enablement has 33 companies that publish a variety of services, generating approximately $500M / year and growing 32% over the past couple of years. This excludes the short and long tail of technology consulting and training businesses, which is virtually impossible to size but arguably accounts for well over $10B in annual revenues.

1.4.1: Developer Relations (DevRel) Has Emerged As An Extension Of Marketing, Support, and Product Organizations Employing 5000 To Further Enable Developer Users And Buyers.

In the 90s, when I started my career at BEA, I was titled “Technology Evangelist”, but I was a member of a small group in marketing that was tasked with educating the market on the importance and usage of emerging technologies of strategic interest to BEA.

BEA was forward thinking in their use of dollars, aggressively adding marketing programs that were targeted to further enable developers. They did this at a time when developer monetization was perceived to be non-existent. However, BEA’s success was tied to Java and, more importantly, Java EE becoming global standards ahead of .NET. Funding promotion and programs that drove adoption of technology in conjunction with product education was a strategic product and revenue driver for BEA.

Now, DevRel is becoming standard practice for enabling developers for APIs, SDKs, or platforms. DevRel plays a role whether your dev user base is internal or external to your organization. Most organizations fund DevRel as part of their marketing budget, but that is a mistake, as connecting developer engagement to revenue is virtually impossible. Fundamentally, DevRel not only acquires and engages developers, but enables and extends them. DevRel is a customer success and support function, and it is fundamental to COGS reduction, GM improvement, and NRR growth.

1.5: A Postmodern Cycle Where Inflation Is A Bigger Risk Than Deflation Will Cause Energy And Labor Supplies To Become More Expensive Driving Higher R&D / COGS.

While historical wage growth numbers are not rising fast enough to be attractive for new developers to rapidly enter the industry, the inflationary period that we are entering will drive up wages and cause labor shortages (see Goldman Sachs: The Postmodern Cycle).

While the increased wage costs may drive more people to become developers, the bigger impact is the bottom line impact to companies producing software.

As wage costs increase, companies will face higher R&D and COGS in order to build and maintain software systems that could under-deliver against intense user expectations.

2.0: Two Decades of Increasing Software System Complexity Has Limited Developer Productivity Gains.

If software was only as simple as this Python snippet:

    print("Hello, World.")

Today, it is possible to build an equivalent 1999 system in a fraction of the effort required in 1999.

Yet, despite many advancements, software teams are arguably less productive in “real-effort” terms than they were in 1999.

Increased complexity that comes from software process and architecture is a productivity drag that reduces the growth of “real-effort”.

2.0.1: Complexity Arises From Scaling, Toolchains, Technology, Collaboration, and Architecture.

Scaling is hard. The number of projects, managers, teams, devices, head count, tools, and requirements generally keeps increasing, leading to unintended layers of complexity.

Let’s explore the nature of complexity in software systems.

2.1: The Rise Of Open Source Dramatically Improved Reusability But Increased Complexity Through House-Of-Cards Transitive Graphs.

In 2000, 85% of a system was coded, and 15% was composed through infrastructure, library, and module reuse.

In 2022, it’s a composition world where 85% of a system comes from package reuse, and 15% is from custom logic.

Reusability is a double edged sword. On the one hand, a developer gets to avoid having to reinvent a task by using a module that specializes in that task.

On the other hand, as reusability increases, it turns into layers of transitive reuse. Modules at higher levels reuse modules from lower levels. Sometimes multiple modules that you reuse share other modules in a similar fashion. The relationship between modules and their reuse is a transitive graph. Organizations (and developers) need to understand the full transitive graph of their dependencies, their dependencies’ dependencies, and so forth all the way to the root code. They must understand this graph for performance and security reasons, as any of those dependencies could be a bottleneck or an attack vector.
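A small sketch shows how quickly the full graph outgrows the list of direct dependencies. The package names and the manifest below are hypothetical; real package managers resolve versions and constraints that this example ignores.

```python
from collections import deque

# Hypothetical manifest: each package lists only its direct dependencies.
DIRECT_DEPENDENCIES = {
    "my-app": ["web-framework", "orm"],
    "web-framework": ["http-parser", "template-engine"],
    "orm": ["db-driver", "http-parser"],
    "http-parser": ["string-utils"],
    "template-engine": ["string-utils"],
    "db-driver": [],
    "string-utils": [],
}


def transitive_dependencies(package: str, graph: dict[str, list[str]]) -> set[str]:
    """Return every other package reachable from `package` in the graph."""
    seen: set[str] = set()
    queue = deque(graph.get(package, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # shared dependencies are only visited once
        seen.add(current)
        queue.extend(graph.get(current, []))
    seen.discard(package)  # guard against cycles that lead back to the start
    return seen


if __name__ == "__main__":
    direct = DIRECT_DEPENDENCIES["my-app"]
    everything = transitive_dependencies("my-app", DIRECT_DEPENDENCIES)
    print(f"{len(direct)} direct, {len(everything)} transitive: {sorted(everything)}")
```

In this toy manifest, two declared dependencies expand to six packages that must be understood, and `string-utils` is reachable through three different paths.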

As reuse grows, the complexity of the transitive graph tends to grow non-linearly, far faster than the number of direct dependencies. Developers have traded off one form of code complexity for reuse complexity.

2.1.1: Build Automation Systems Facilitate Managing Dependency Complexity - To A Small Degree - Leading To Dozens Of Options That Dev Teams Must Choose From.

Build systems use automation through the form of scripting or other programs to turn source code into runnable binaries. In order to appropriately create the right binary, many build systems have to maintain an internal structure of the various dependencies. As a result, making use of the build systems imposes some reasonable order and structure onto the transitive dependency graph.
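As an illustration of the ordering a build system imposes, the sketch below uses Python's standard `graphlib` module to order a hypothetical set of build targets. It is not how any particular build tool is implemented; the target names are made up.

```python
from graphlib import TopologicalSorter

# Hypothetical build targets mapped to the targets they depend on.
BUILD_GRAPH = {
    "app": {"web-framework", "orm"},
    "web-framework": {"http-parser"},
    "orm": {"http-parser"},
    "http-parser": set(),
}


def build_order(graph: dict[str, set[str]]) -> list[str]:
    """Order targets so every dependency is built before its dependents."""
    # static_order() raises graphlib.CycleError if the graph has a cycle.
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(" -> ".join(build_order(BUILD_GRAPH)))
```

The only guarantee is that dependencies come first; targets with no relationship to each other, such as `web-framework` and `orm` here, may be built in either order or in parallel.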

As such, there can be as much variation in build automation systems as there is in programming languages. Some build systems are pinned to the unique structure of a single programming language while others work across languages.

2.2: Paradigm Shift: The Move From Centralized To Distributed Version Control Increases Performance And Scale Of Collaboration While Increasing Threshold Of What Constitutes ‘Change Control Knowledgeable’.

The wave of decentralized version control began when git was launched in 2005 by Linus Torvalds. The key difference between centralized and decentralized version control is whether there is a single copy of the database (centralized) or a local copy for every developer (decentralized). Decentralization required every contributing developer to learn and maintain skills around making changes locally vs. remotely, along with advanced techniques for synchronizing changes between replicas.

This subtle difference has significantly increased the complexity involved in contributing to a project. The git command that is widely used today was originally intended to be a low-level layer that other, more user-friendly programs would build upon. It was supposed to be similar to Unix pipes and follow the Unix design convention of doing one thing and doing it well. But as time went on and early developers became familiar with the plumbing and how to combine low-level commands to achieve high-level objectives, the steering group around git design decided that what they had provided was sufficient.

This complexity is out there for many to see. There are close to 140K active questions about the appropriate way to use git on Stack Overflow.

2.3: Paradigm Shift: The Transition From Waterfall To Agile Over Two Decades Has Created A Cultural Desire For Dev Teams To Ship Faster.

In the 1990s, Waterfall was the dominant paradigm, a practice that scheduled releases around a significant version with baskets of features, typically planned months or quarters in advance. With the pace of markets shifting at increasing velocity, Waterfall didn’t provide an approach that allowed a team to adapt to change.

Agile, an iterative practice of software development that embraces change as it happens, has been broadly adopted in the 20 years since its launch. Organizations cite many reasons for adopting Agile practices including enhanced ability to manage changing priorities, accelerating software delivery, improvement with business and IT alignment, enhanced software quality, better project visibility, and increased software maintainability.

This has led to a basic belief that teams that can ship more frequently while quickly incorporating feedback from end users are more productive with higher end user satisfaction.

However, taken to its natural conclusion, agile encourages teams to ship changes continuously while addressing end user satisfaction at massive scale, 24/7. This ultimately backfires, creating an unsustainable software system due to a fire hose of inputs, overstimulated teams, and talent burnout that stems from the inability to gain a sense of “done” or “achievement”.

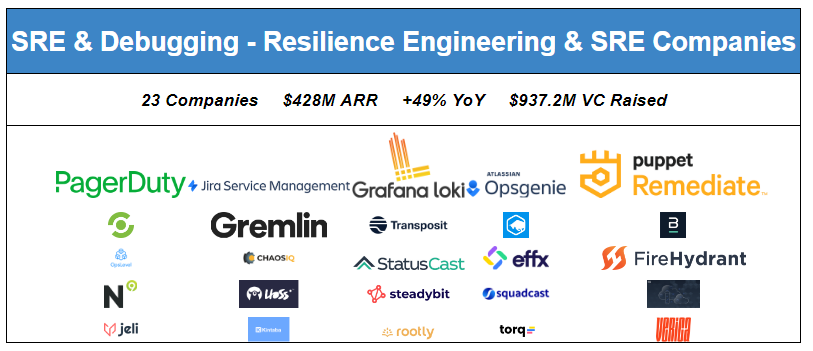

2.3.1: Site Reliability Engineering Addresses Agile Shortcomings With Specialists In Quality Of Experience And Availability And Is A Fast Growing $428M ARR Market.

Site Reliability Engineering and, in many ways, the mega-billions Customer Success markets have emerged, in part, to address the shortcomings of agile by creating specialists who culturally embrace (and build processes and buy tools for) maintaining the software-end user link at the pace of accelerating change.

2.4: API-First Pervasiveness Has Created 1 Trillion Programmable Endpoints And An API Ecosystem That Is $12B ARR, +24% YOY, Larger Than GDP Of 1/3 Of 190 Global Countries.

APIs are a way for developers to create a user experience that is universal.

API contracts are standardized and free from user hardware, operating system, or platform constraints.

This has led to the emergence of the API economy, the rich entanglement of business that engages across APIs. Developers build intelligent abstractions into APIs, gain adoption of the API, which encourages other complex systems to be exposed as APIs.

We are approaching 1 trillion programmable endpoints, a volume so significant that developers face a digital rain forest of density (i.e., complexity!) that they must sort through to select and make appropriate use of APIs.

APIs act as third-party dependencies. Much like the transitive graphs of open source components, API dependency graphs create a supply chain requiring software development and operations teams to become skilled in the upstream (API consumers) and downstream (API dependencies) management of relationships.

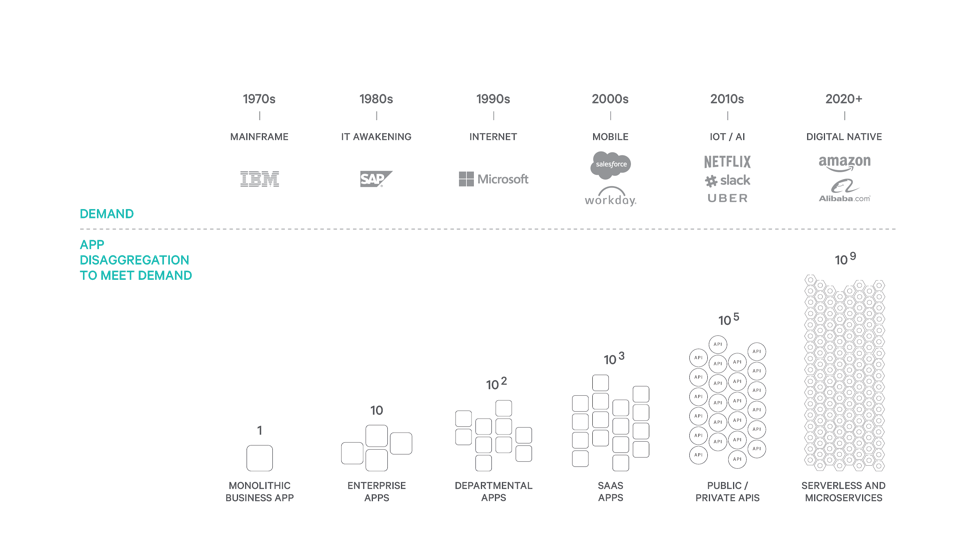

Further, as consumer demand for applications increases, applications disaggregate their architectures in order to create structured services that can fulfill the demand. Much the way that a single developer consumes more data over time, so will a unit of logic. However, as that unit of logic increases in size and data consumption, it becomes more difficult to manage. Development teams naturally look to break the logic into multiple sub-components, both to create clearer boundaries of responsibility and to make a single unit of logic more maintainable by a smaller set of developers.

With nearly every unit of logic increasingly being programmable itself, each split creates a new API abstraction. The natural tendency to continue breaking large systems into smaller ones creates exponential increases in the number of programmable endpoints.

This, married with billions of IoT devices on-ramping each year, creates a rain forest of APIs that developers must sort through.

2.5: Diversity Of Devices Drives A Broad Spectrum Of User Experiences That Create A Web Of Interoperability And Portability Challenges.

The breadth of interfaces has led to a variety of app, browser, device, and terminal implementations, which make interoperability and app portability an increasingly complex web of skills that must be honed by developers.

This complexity must be embraced by front-end developers as consistent behavior of a system across devices and browsers creates a predictable User Experience (UX) needed to create user satisfaction.

Protocols underlying the Web, such as HTTP and TCP, offer a network substrate which is a form of universal communication interoperability, but are unable to accommodate properties that make app presentation interoperability simpler.

As the Web is constantly undergoing change by supporting different forms of text, user experience, voice, video, and alternative forms of user input / output, higher level Web standards that must be implemented by an operating system or browser engine struggle to keep their support current and on-par with their rivals.

One representation of this is the Spellbook of Modern Web Development, a compendium of the technologies that a front-end developer must learn to create interoperable user experiences.

2.6: Fragmented Tooling, Infrastructure, and Platform Drag Productivity As Developers Spend Increasingly More Time Evaluating And Adopting Technologies Which May Not Exist In Five Years.

Choice in developer tooling, infrastructure, and platforms is the richest it has ever been.

Generally, competition is indicative of a healthy, robust market and a trade of goods.

However, too much choice creates complexity and can lead to lower productivity. Development teams frequently must re-assess the tooling landscape, compare alternatives, consider the impact of adopting new tools, and continually study in order to get maximum effectiveness out of the tools that are adopted.

The number of companies in the developer-led landscape has grown nearly 15% annually since 2009. But the rate of growth has increased to 19% over the past 3 years since we began publishing it annually.

The choice that developers face in choosing their technology and process stack has been increasing at an increasing rate.

We create a new specialization in the landscape when we see multiple companies in the same category that specialize (and brand themselves appropriately) in a more narrow fashion than the rest of their rivals. When three or more companies brand similarly, we group them into a specialization and give it a label. The number of specializations changing over time is another reflection of tooling challenges facing developers.

Dev tooling and platform companies need to cross over a $10M ARR threshold for them to be independently viable without VC infusions. Few of these new entrants have crossed over $1M ARR and it’s possible that a large number of the new entrants will not still be viable in 5 years.

A micro-illustration of this trend has been the shift in server-side technologies. In 2002, developers were predominantly left with two platforms on which they could depend: Java Enterprise Edition (JavaEE), which was supported by 100s of software vendors, and Microsoft .NET. 80% of server-side development was initiated by selecting one of these two general-purpose platforms. There were other technologies available, such as PHP, but they were adopted for fringe use cases at the time.

2.6.1: A Developer Touches An Average Of 15 Tools To Deploy A Code Fix.

As the developer tool chain has increased in sophistication, it has also created complexity. For developers, this complexity is a productivity sink. Infrastructure and DevOps engineers devote time to keeping these tools running and integrated. For businesses, this has become a security issue, as interruptions to the software supply chain interrupt the business.

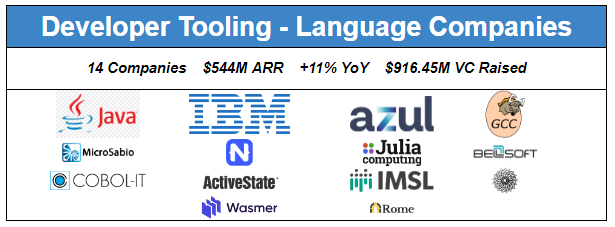

2.6.2: 100s Of Programming Languages Give Developers Choice But Fragment The Market And Lead To Low ARR Language Companies.

Today, the frameworks and platforms available for developing APIs, micro services, and other back-end systems are practically innumerable.

This is a small example of fragmentation that happens. In the case of languages, choice is great for developers, but the broad spectrum available to choose from is a form of complexity as developers have to study, learn and test a variety of technologies before they make a selection.

It’s increasingly difficult for vendors to monetize around programming languages as a result of open source and this fragmentation.

While the choice of programming languages, frameworks, and platforms has increased dramatically, so has the complexity associated with choosing the right platform for a project, training the organizational talent pool to make effective use of a new platform, and recruiting new hires who are comfortable with, and want to be part of, the project’s technology sphere.

2.7: Organizational Dynamics Limit Productivity By Expanding And Contracting Responsibilities Across Dev Teams And Over A Dozen Other Involved Teams.

Any organization that designs a system (defined broadly) will produce a design whose structure is a copy of the organization's communication structure.

Melvin E. Conway

Conway’s Law reasons that for a software module to function, its multiple authors must communicate frequently with each other. Therefore, the interface structure of a system will reflect the social boundaries of the group that produced it, and communication becomes more difficult as the software module grows in complexity.

Taken more broadly, as software systems grow in scope and function the resulting organization will create additional units of communication (ie, additional teams that perform a single function) across which everyone must communicate.

One way to measure this organizational complexity is to look at the number of different job titles that exist within and around development organizations.

Patrick Debois of Snyk captures this as a function of changing titles over time, describing the role of DevOps as anyone chartered with overcoming the friction created by silos, with everyone else being an engineer.

2.7.1 Blurred Lines Between Work And Home Creates Engineer Burnout Conditions That Too Often Spiral.

The media speaks of the “Great Resignation” of knowledge workers, many of whom are software developers, driven by the effects of COVID.

This isn’t a resignation as much as a set of musical chairs. Developers are not quitting their jobs to become full time musicians. Rather, they are quitting their jobs for better (paying, culture, project, role) jobs.

A survey by Haystack asked developers why they quit jobs, and 83% said burnout was a key factor. While moving to a new job to obtain better conditions is the outcome of the Great Resignation, the instigation is generally people being dissatisfied in their current environment.

Developers burn out for a variety of reasons:

Unrealistic project plans (whether self-determined or bestowed from above).

Remote working environments creating unhealthy boundaries between home and work. The rise of ChatOps has enabled anyone, anywhere, anytime to collaborate as a collective. While this instant discussion breaks down many barriers and can be highly efficient, it’s never off and can be overwhelming. Individuals who are unable to set healthy boundaries between being on and off from their work invite eventual frustration with the nature of their work.

The hamster wheel effect of doing a great job leads to getting even more work.

Burnout driven by unrealistic expectations, prolonged intensive communication, and the never-ending cycle of obtaining more work is a form of complexity that our industry must address.

2.8: Configuration Is A Cross-Cutting Problem That Amplifies Complexity As The Source For Many Massive Failures.

If the final product of software engineering is to produce an artifact, then configuration is the concern that alters the definition and behavior of the artifact.

Configuration is inherently a problem of cross-cutting concerns of data, policy, schema, and validation.

In other words, configuration is everywhere and it subsequently impacts all software.

Software systems are built through composition and inheritance, where top-level software systems are able to redefine and override the behaviors from the lower levels.

This principle doesn’t apply to configuration. Configuration is not strictly inheritable, so each configuration from every new component turns into a combinatorial problem since two configurations from lower level components do not have a strict order in how they “combine” to work together.

Things get complex very fast as a system with 10 open source libraries has >3M strict orderings on how those configurations could be combined. With each configuration having sometimes 100s of properties each, the potential ordering and conflict scenarios are astronomical.
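The ordering count is simply a factorial, which a short sketch can confirm. The function name is hypothetical.

```python
import math


def strict_orderings(component_count: int) -> int:
    """Number of distinct orders in which n configurations can be applied."""
    return math.factorial(component_count)


if __name__ == "__main__":
    for count in (3, 5, 10):
        print(f"{count} components: {strict_orderings(count):,} orderings")
```

For 10 libraries this gives 10! = 3,628,800 orderings, before the individual properties inside each configuration are even considered.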

It is not surprising that many, if not the majority, of big outages can be attributed to configuration issues.

I am particularly excited by the work being done by CUE Labs around cuelang. CUE takes a compositional approach to configuration similar to how programming languages inherit from classes and this eliminates the combinatorial issue of configuration ordering.

An aspect-oriented or compositional approach to configuration prevents the complexities of configuration. By making composition commutative, associative, and idempotent, the combinatorial issue imposed by inheritance vanishes. Additionally, it makes it possible to reason over values and configuration as a whole.
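The difference between order-dependent and order-independent merging can be sketched in a few lines. This is a loose illustration of the idea, not CUE's implementation or semantics: the `unify` and `override` functions, the `CONFLICT` sentinel, and the sample configurations are all hypothetical.

```python
from functools import reduce
from itertools import permutations

CONFLICT = "<conflict>"  # sentinel marking keys whose values disagree


def unify(a: dict, b: dict) -> dict:
    """Merge two configs: agreeing values pass through, disagreements become CONFLICT."""
    merged = dict(a)
    for key, value in b.items():
        if key not in merged or merged[key] == value:
            merged[key] = value
        else:
            merged[key] = CONFLICT  # surfaced explicitly instead of silently overridden
    return merged


def override(a: dict, b: dict) -> dict:
    """Inheritance-style merge for contrast: the later config silently wins."""
    return {**a, **b}


# Hypothetical configurations contributed by three components.
CONFIGS = [
    {"port": 8080, "tls": True},
    {"port": 8080, "timeout": 30},
    {"timeout": 60},
]

if __name__ == "__main__":
    unified = {str(reduce(unify, order, {})) for order in permutations(CONFIGS)}
    overridden = {reduce(override, order, {})["timeout"] for order in permutations(CONFIGS)}
    print("Distinct unify results:", len(unified))
    print("Timeout values seen with override:", sorted(overridden))
```

Because `unify` is commutative, associative, and idempotent, every ordering of the three configurations yields the same result, and the disagreement over `timeout` is reported rather than hidden. With `override`, the final `timeout` depends entirely on which configuration happened to be applied last.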

Some people say that we have Software Engineering under control and that we will see more incremental improvements there, but Configuration Engineering is still a hot mess and will see the next wave of gains (based on what they see with CUE).

2.9: Value Stream Management Is A $277M ARR +10% YOY Market That Empowers Delivery Organizations To Measure, Mitigate, and Monitor Complexity.

If you can’t beat them, join them.

— 1930s Proverb

While many software leaders’ default approach is to consider tactics for eliminating complexity, to some degree it will always exist.

As software delivery organizations grow projects, end users, and philosophies, complexity becomes inherent (and sometimes metastasizes).

Large organizations then undertake varying approaches to monitor, observe, and assess the nature of complexity that is affecting their organization.

Value Stream Management is a $277M ARR +10% market dedicated to helping program management, governance, and software delivery leaders wrangle complexity.

While growing slower than the rest of the developer-led landscape, this market is a mixture of the haves and have nots, with a few companies growing significantly faster than a couple others.

Value Streams, at least in VSM parlance, are documented traces of software development processes. The trace captures who, where, how, when, which tools, and the expected outcomes of the software delivery process. Value Stream Management measures, alters, and improves those processes to improve outcomes, meet the desires of software talent, or assess how processes impact customers.

One way to think of Value Streams is to compare them to the cycle time measurements promoted by Engineering Efficiency products. Cycle time is a measure of the time it takes from code commit until release in production. A piece of code is tactical and doesn’t necessarily reflect a business impact in the form of a feature alteration. On the other hand, Value Streams measure “Flow Cycle Time”, which is the time between ideation and release. Measuring it requires integrating information across numerous disparate tools that often exist across multiple teams (since capabilities are spread across different groups).
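The distinction between the two measurements can be sketched with a single feature's timeline. The timestamps, field names, and the tools they are attributed to are hypothetical; a real VSM product would gather these events through integrations rather than a hand-written dictionary.

```python
from datetime import datetime, timedelta

# Hypothetical timestamps for one feature, each owned by a different tool.
FEATURE_EVENTS = {
    "idea_logged": datetime(2022, 3, 1, 9, 0),     # e.g. from an issue tracker
    "first_commit": datetime(2022, 3, 10, 14, 0),  # e.g. from version control
    "released": datetime(2022, 3, 15, 14, 0),      # e.g. from a deployment tool
}


def cycle_time(events: dict[str, datetime]) -> timedelta:
    """Time from code commit until release in production."""
    return events["released"] - events["first_commit"]


def flow_cycle_time(events: dict[str, datetime]) -> timedelta:
    """Time from ideation until release, spanning every tool in between."""
    return events["released"] - events["idea_logged"]


if __name__ == "__main__":
    print("Cycle time:     ", cycle_time(FEATURE_EVENTS))
    print("Flow cycle time:", flow_cycle_time(FEATURE_EVENTS))
```

In this made-up timeline the code took 5 days to ship, but the idea took more than 14 days to reach users. The gap is time spent outside the commit-to-release window, which only becomes visible when data from several tools is joined.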

While VSM sounds like organizational, cultural, and people monitoring, its roots are in real-time process mapping that discovers how people and tools interact with each other. This is a difficult software integration challenge, which is made harder when organizations have cross-team or cross-product boundaries that must be traversed.

VSM doesn’t undermine the iterative release empowerment that comes from 2-4 pizza, autonomous, independently operating software teams. Rather, VSM gives program managers essential insights to better align those tip-of-the-spear units with broader corporate objectives and the many other units moving in their own direction.

One of the leaders in the VSM space is Tasktop, which interestingly began as an Eclipse IDE plug-in for developers to switch their code context quickly. Switching context meant changing the view of the code, but also the related view of the other tools used by the developer: issue management, design tools, CI, and so on. Tasktop extended their IDE expertise and created the VSM category by building a service bus that integrated and synchronized disparate tools. This led them to integrating tools across teams, mining that information to create intelligence, and then providing definition and measurement of value streams — ie, measuring the flow of value in the creation and management of digital assets.

Tasktop's goal is to provide an intelligence platform that can plug into the various tools across the software development lifecycle to help technology leaders understand what they are and aren't doing well in their software delivery, so they can iteratively improve their performance and better tie those operational improvements to their business outcomes.

The451 Analyst Report - 3/29/22

In conversation, Mik Kersten, Tasktop’s CEO and Founder, observed that the past two decades have led to more specialization of the functions within the developer construction process. Each function has specialized tooling, and it’s generally disconnected. This is in contrast to the 90s, when development teams leveraged a fully integrated tool suite that could more easily be mined to determine what corporate value streams emerged. The specialization trend causes tool decentralization, which makes the scale of integration more challenging.

Tasktop was recently acquired by Planview, which offers solutions to help measure the strategic and business impact of changes. The combination will allow CEOs, CIOs, CDOs, and CFOs to have a common, consistent measurement of the impact of change and complexity across physical and digital systems.

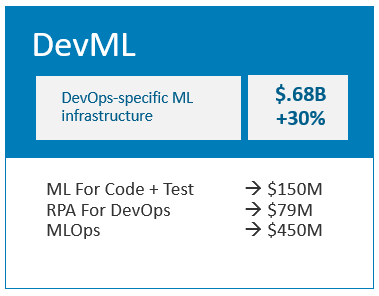

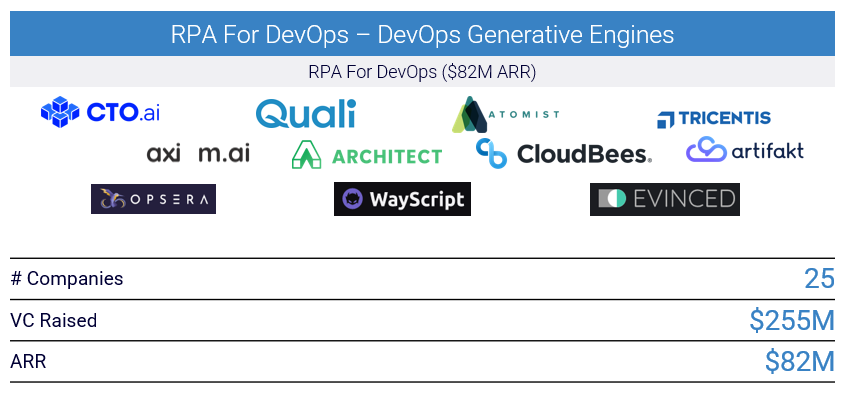

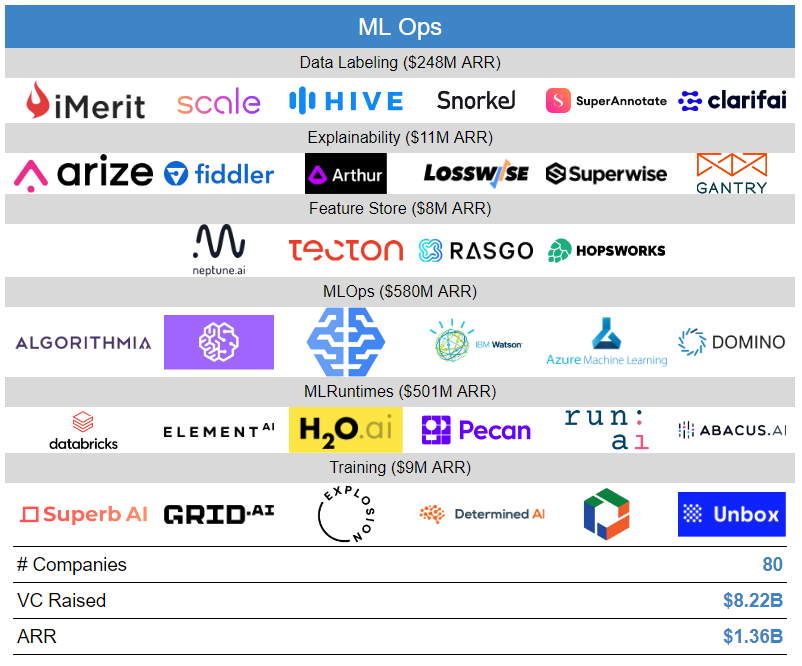

3.0: DevOps Automation, Which Abstracts And Shifts Complexity, Improves Developer Productivity And Underpins $8.9B ARR, 403 Companies, And 21% Growth.

With time and pressure, talented engineers have brilliant insights that naturally make many once-hard DevOps things easy.

Julia Evans, a software developer in Montreal, compiled a list of such things, itemized by her colleagues: SSL, concurrency, cross-compilation, configuring cloud infrastructure, setting up a dev environment, rich Web sites with back ends, training neural networks, deploying a Web site, running a database, image recognition, cryptography with opinionated crypto primitives, live updates to Web pages, building video games, NLP, and so on.

Over time, many things that were a struggle become easier.

But that doesn’t mean that developers are more productive.

3.1: Automation Is The Most Common Approach For Addressing Complexity Facing Development Teams And 403 Vendors Generate $8.948B ARR.

Automation is the use of largely automatic equipment in a system of manufacturing or other production process.

Oxford Dictionary

Automation applied to developer tasks materializes in many forms.

Automation (usually) has a large and quantifiable value proposition tied to the productivity of developers and their teams. Consequently, DevOps automation plays have historically been attractive places for entrepreneurs and investors to make bets.

Across the developer-led landscape, there are 403 companies whose primary product is a form of automation for development. Together they generate $8.948B ARR, growing over 20% annually.

Let’s discuss the various types of automation that have been introduced to make developers more productive.

This list may not be exhaustive.

3.2: Automation – Programming Languages, Decorators, and Build Systems Evolve By Introducing Architectural Abstractions That Get More Done With Fewer Lines Of Code.

Over time, programming languages introduce syntax that makes hard things simpler.

Originally, programming language innovation was typically around improving the nature of how low level primitives could move or operate against data, which were essential to any kind of workload.

Over time, some programming languages have specialized around types of workloads. Java was ideal for server run times. Python for data science. Julia for visualizations. JavaScript for cross-browser portability.

Increasingly, many languages (or extensions to those languages through decorators or build plug-ins) extend their syntax to represent architectural building blocks. Take, for example, the Ballerina programming language, which has syntax for representing a Web-hosted API baked into the language. Ballerina programmers can write a “service” which, when compiled, is built into an executable whose runtime exposes an API accessible over the network. The developer learns a narrow set of syntax that takes care of complex networking protocols, data transformation from packets into code structures, load balancing and fault tolerance of the service, and how asynchronous notifications are handled.

    service "Albums" on new grpc:Listener(port) {
        remote function getAlbum(string id) returns Album|error {
            // do some stuff
        }

        remote function addAlbum(Album album) returns Album|error {
            // do some stuff
        }
    }

Perhaps the most widely adopted language abstraction isn’t a language at all. The Spring Framework, which was originally sponsored by a startup, then by Pivotal, and now by VMware, uses Inversion of Control and code decorators (annotations, in Java terms) to instruct compilers and runtimes how to inject behaviors into and around code. Spring is the most dominant framework in the Java community and is used by well over 1M developers.

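    package com.example.demo;

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RequestParam;
    import org.springframework.web.bind.annotation.RestController;

    // The annotations tell Spring to bootstrap the app and expose this class as a REST controller.
    @SpringBootApplication
    @RestController
    public class DemoApplication {

        public static void main(String[] args) {
            SpringApplication.run(DemoApplication.class, args);
        }

        // Handles GET /hello?name=...; "name" defaults to "World" when omitted.
        @GetMapping("/hello")
        public String hello(
                @RequestParam(value = "name", defaultValue = "World") String name) {
            return String.format("Hello %s!", name);
        }
    }

3.2.1: Substantial Businesses Emerge From Programming Disruptions And Create Large, Long-Lived Software Companies.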

It is easy to dismiss programming languages as constructs for geeks.

However, the right language innovation strikes a special balance between abstraction and configurable power. When that balance is discovered, developers adopt that construct and this opens the door for substantial platform businesses to emerge.

3.3: Automation – Scripting Is The Backbone Of Administration, An Immeasurable Market, And A Blackhole Of Creative Technical Debt.

Scripts are a form of code that automates tasks that were previously executed individually. They are generally written in interpreted rather than compiled languages.

Well known scripting languages include PowerShell, Perl, AWK, and Bash.

Scripts form the backbone of most systems by offering simple ways to build automation logic around standard interfaces within that system.

There are more than 4000 known scripting languages. Many languages are system-specific, but many have also been ported to work across many systems.

Scripting languages come in different types: glue languages (PowerShell), editor languages (VIM macros), job control languages (Unix shell), GUI scripting (some RPA vendors implement these), application languages (Emacs Lisp), and extension languages (JavaScript, when its purpose was scripting the browser).

Scripting is very simple to get started with, but scripting languages often lack the constructs needed to keep logic maintainable, and their syntax often makes deciphering a script’s intent challenging.

Consequently, a lot of active automation built as scripts is costly to debug and fix when it breaks. No one really knows if scripting is so popular because of its approachability or because script-slinging admins are happy about their job security as their internal script library grows to a level that others cannot maintain (ok, a little humor).

There has been limited commercialization around scripting and scripting languages.

3.4: Automation – Scaffolding, SDKs, and Frameworks Generate $808M ARR.

For as long as programming languages have existed, developers have created frameworks and Software Development Kits (SDKs) that simplify the implementation of frequently needed patterns.

Most SDKs and Frameworks are bound to a particular programming language. This poses challenges in how to build adoption, community, and commercial interests around a specific framework.

There are 78 commercial companies labeled as SDKs or Frameworks in the developer landscape, and together they generate $808M ARR. Leaders in this space include Microsoft, Progress Software, Tibco, QT Group, and Flexera Software. These are some odd names, but they all have specialty SDKs that dominate a niche segment and are able to generate >$50M ARR.

3.5: Automation – Model-Driven Architecture Enables System Design Through Graphical Domain Modeling And Associated Tools Generate $40M ARR In 2022.

Model-driven engineering and architecture is a software design methodology that leverages a domain model in order to infer system behavior. A resulting framework can interpret that behavior to automate the creation of a system that reflects that behavior. In this situation, the developer is a domain model engineer and then leverages frameworks that create generative systems which are then operationalized.
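As a rough illustration of the idea, the sketch below treats a small dictionary as the domain model and lets a generic function generate a working entity class from it. It is written in Python; the `ALBUM_MODEL` structure and the `generate_entity` helper are assumptions invented for this example and do not reflect how any commercial modeling tool works.

```python
from dataclasses import fields, make_dataclass

# Hypothetical domain model: the engineer edits this description, not the code.
ALBUM_MODEL = {
    "name": "Album",
    "attributes": [("id", str), ("title", str), ("year", int)],
}


def generate_entity(model):
    """Generate a dataclass (the 'system') from a domain model description."""
    return make_dataclass(model["name"], model["attributes"])


# The generated class behaves like a hand-written one.
Album = generate_entity(ALBUM_MODEL)
album = Album(id="a1", title="Kind of Blue", year=1959)
attribute_names = [f.name for f in fields(Album)]

print(album)
print(attribute_names)
```

Adding an attribute to the model changes the generated class without anyone touching code, which is the appeal of the approach. The sketch also hints at the limitation discussed in this section: anything the model cannot express has to be handled outside of it.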

There are a variety of historical tools that facilitate model-driven architecture, including Sparx Systems, Eclipse Modeling Framework, Compuware OptimalJ, IBM Rhapsody, and SAP PowerDesigner.

Generally, model-driven architecture and engineering is not widely used. Most systems were based upon the UML design language, defined by the Object Management Group in the 1990s. UML is a robust and descriptive language for describing models, but it fell out of favor. If you want to capture the complexity of a program in a picture, you end up with a very complex picture. Many developers tend to feel that code is a better representation of a complex system than a model.

The relatively weak ARR seems to confirm this orientation.

3.5.1: Domain Modeling Has Numerous Derivatives With DSLs Acting As Launch Pads For DevOps-Defining Businesses.

Application Lifecycle Management (ALM), Business Process Model and Notation (BPMN), Behavior-Driven Development (BDD), Test-Driven Development (TDD), Domain-Driven Design (DDD), Domain-Specific Languages (DSL), and Story-Driven Modeling are all various forms of abstractions that extend pipeline constructs, workflow engines, or programming languages to include optimizations which create a specialized framework for a specific domain.

3.5.2: DSLs Define And Underpin Generation-Defining DevOps Companies.

HTML is the most well-known DSL. Used to define Web pages, it’s a DSL that is optimized for displaying documents in a Web browser. Documents in a browser have different interaction properties than those that are displayed in another viewer, such as a PDF window. There are so many behaviors that are encapsulated within the browser that HTML has undergone 5 major revisions over 30 years.

Puppet Labs, one of the few companies in the developer-led landscape with more than $100M ARR, had a cloud and server-based DSL used to define provisioning profiles. Puppet was acquired in Q2 2022 by Perforce for what was rumored to be a low revenue multiple.

Terraform, which has increasingly become the standard approach to automating infrastructure within any cloud, was built upon the HashiCorp Configuration Language DSL and is a key contributor to HashiCorp’s $6.3B market cap.

DSLs are found within spreadsheets, gaming engines, meta programming environments, databases (SQL?!?), hardware description languages, network description languages, and rules engines. That is pretty much the entirety of the world’s most popular software.
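To show how little syntax a DSL needs in order to be useful, here is a toy provisioning DSL and a Python parser for it. The three-word grammar (`kind name state`) is hypothetical and invented for this sketch; it is not Puppet's language or HCL.

```python
# A toy, hypothetical provisioning profile: one resource per line.
PROFILE = """
# desired state for a web server
package nginx installed
service nginx running
file /etc/motd present
"""


def parse_profile(text):
    """Turn each 'kind name state' line into a (kind, name, state) tuple."""
    resources = []
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        parts = line.split()
        if len(parts) != 3:
            raise ValueError(f"expected 'kind name state', got: {line!r}")
        resources.append(tuple(parts))
    return resources


resources = parse_profile(PROFILE)
for kind, name, state in resources:
    print(f"ensure {kind} {name!r} is {state}")
```

The profile says what the server should look like and nothing about how to get there. That narrowness is what makes a DSL approachable, and it is also why each one only fits its own domain.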

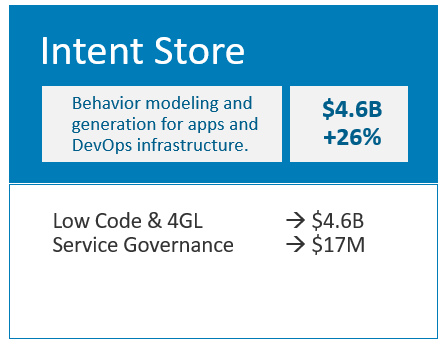

3.6: Automation – Low Code Platforms Enable Rapid Development Of Applications Using Opinionated Frameworks And Have Exploded Over The Past Decade And Generate $4.6B ARR Across A Dizzying 110 Companies.

Low Code platforms provide a development environment that can create applications, data constructs, workflows, or other digital services using model-driven design, automatic code generation, and visual programming.

Low Code systems are able to more rapidly build certain classes of digital services by creating an opinionated abstraction tailored to a specific type of asset being created. Whereas programming languages let you build anything that your creativity can muster, Low Code systems constrain the problem domain to a certain type of asset so that automation can be applied to more rapidly and easily build the asset.

Low Code systems also lower the skill level required to create applications, and a common benefit is that a wider range of people can contribute to an application's development.

3.6.1: Low Code Systems Multiply The Developer Population Increasing It By Over 500K.

A hallmark of Low Code systems is their approachability. This alters the skill set required for a knowledge worker to be successful with these systems. Many people consider the formula capabilities in Microsoft Excel to be a type of Low Code approach, and would argue that anyone with Excel skills is a Low Code developer.

However you slice it, Low Code systems amplify the developer population, extending it to a wide swath of knowledge workers. It’s not unreasonable to think that for every $10K generated by a Low Code company, there is 1 knowledge worker who is empowered to create something they otherwise could not have. At $4.6B ARR, a 500K increase, or ~3%, to the developer population is not an unreasonable impact.
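The back-of-the-envelope estimate above can be written out in a few lines of Python. The inputs are the figures quoted in the text; the variable names are mine, and the $10K-per-worker ratio is the article's own assumption rather than a measured value.

```python
LOW_CODE_ARR_USD = 4_600_000_000   # $4.6B ARR across Low Code companies (from the text)
ARR_PER_NEW_CREATOR_USD = 10_000   # assumed: $10K of ARR per empowered knowledge worker
CLAIMED_INCREASE = 500_000         # the rounded increase quoted in the text
CLAIMED_SHARE = 0.03               # "~3%" of the developer population

# Knowledge workers implied by the $10K ratio.
new_creators = LOW_CODE_ARR_USD // ARR_PER_NEW_CREATOR_USD

# Developer population implied by "500K is ~3%".
implied_developer_base = round(CLAIMED_INCREASE / CLAIMED_SHARE)

print(f"new creators: {new_creators:,}")
print(f"implied developer base: {implied_developer_base:,}")
```

Taken literally, the ratio yields about 460K new creators, which the text rounds to 500K, and the ~3% figure implies a baseline of roughly 16 to 17 million developers.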

3.6.2: Low Code Companies Specialize By Focusing On Narrow Workloads.

We reviewed the offerings of 110 low code companies. We broke them into five general categories based upon the types of assets that are automatically generated.

As you can imagine, the demand (and associated ARR) for building applications and Web sites has attracted the most customers, VC funding, and startups.

Application Low Code Companies Are $2B ARR. These companies generate Web and Mobile applications. It is an extremely competitive space, and most startups have little differentiation outside of how their model-driven GUI presents options. Many vendors also provide hosting of the resulting application and generate substantial revenues from managing the application.

Business Workflow Low Code Companies Are $875M ARR But Growing At Only 10%. Workflow systems are lightweight integration PaaS offerings that enable rapid interconnection of disparate systems to implement business processes or to automate cross-system tasks. There is a significantly larger iPaaS market, focused on corporate-scale systems integration, that is not included within this segment. Growth is poor in this segment because, while heterogeneity increases, the need for this type of business process automation appears only in pockets throughout companies and has less bottoms-up demand from knowledge workers.

ChatBot, Internal App, And Voice Flow Companies Are Nascent Vendors That Unlock New Types Of Customer Experience. Internal apps are those that provide employee services, such as a small app within Slack that lets employees query how much vacation time they have accrued. These micro apps can then be extended into different mediums, such as on your phone, within a chat window, or on a heads-up display. Given how nascent these mini apps are, the segment collectively has low revenues to show, though VCs have piled in with big checks in the past couple of years.

Data-Driven Low Code Systems Generate Digital Services And Apps From Existing Databases And Spreadsheets To Minimize The Time To Unlock Rich Sources Of Existing Data. These systems range from rich spreadsheets that can be packaged as applications for end users to consume, all the way to systems that convert existing databases into functioning applications. There is remarkable growth and heavy VC investment flowing into this segment as investors scramble to convert 100 million Microsoft Excel users into app developers.

Web Site Generators Make It Simple To Build And Launch Sites For Millions Of Small Groups and SMBs. Anybody remember Geocities from the 1990s? As the nature of what constitutes a Web site has evolved and the form factors have changed, new opportunities for Web Site generators have emerged. The market is large and dominated by a small number of vendors. As changes in Web site form factors have slowed in recent years, the ability of startups to differentiate in this space is narrowing.

3.6.3: With Only $6.35B VC Raised Across 110 Companies Generating Over $4B ARR, Low Code Companies Have Demonstrated The Best Capital Efficiency Of Any Category In The Developer Landscape.

As the complexity of the development process rises and the cost to build custom software rises, there will be further interest and acceleration of Low Code platforms. These dynamics are inextricably intertwined.

3.6.4: Low Code Companies That Remove 20x Of The Architectural And Operational Concerns Of Developers Will Emerge As Major Platforms.

Of the 110 companies that we reviewed, many were simple abstractions that automated core portions of the data or GUI workflow for developers. These abstractions were opinionated and helped, but oftentimes users of those systems reported modest, not spectacular, gains in productivity.

More importantly, given the opinionated nature of how low code systems work, the productivity gains during the application creation process are offset by the learning curve required for new developers to maintain the application. Yes, it may be simpler, but since it is opinionated, it has a learning curve as there is limited experiential carry over that can come from working with other programming languages and platforms.

I’m particularly excited by low code platforms that embrace a developer experience familiar to most developers and simple enough for non-developers to learn, while also eliminating 20x of the architectural and operational concerns developers typically face.

Only a couple companies in the review lived up to this expectation.

One is Kalix, which promises the ability to build scalable APIs and microservices without developers dealing with any operational details, learning SQL or database programming, or learning new programming languages. Kalix lets developers use the language of their choice, with simple procedural constructs, to create any kind of logic they desire. Kalix automates the detection and saving of state into persistence engines using Reactive Principles, which allows the applications built by users to have global round trip write latency of under 20ms. Developers embrace their own tools, their own languages, their existing skills, and can create systems that scale up to 100K TPS. That is impressive.

3.7: Automation – Shipping Faster Through Progressive Delivery Automates Pipelines That Move Code From Design Through Release And Back Creating A Massive $3.5B ARR Industry Of Proprietary Providers.

Pipelines automate tedious and time-consuming tasks that must be repeated frequently by developers or after code changes. Whether it is compiling, building artifacts, uploading assets, moving assets to new environments, testing, analyzing, or activating new pieces of code, the delivery of code into production is a complex workflow of steps.
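At its core, a pipeline is an ordered list of stages that stops at the first failure. The Python sketch below shows that shape under simplifying assumptions: the stage names, the shared `context` dictionary, and the `run_pipeline` helper are all hypothetical and are not modeled on any particular CI/CD product.

```python
def run_pipeline(stages, context):
    """Run stages in order; stop at the first failure.

    Returns (completed_stage_names, failed_stage_name_or_None).
    """
    completed = []
    for name, step in stages:
        if not step(context):
            return completed, name
        completed.append(name)
    return completed, None


# Hypothetical stages: each receives the shared context and returns True on success.
def compile_code(ctx):
    ctx["artifact"] = "app-1.0.tar.gz"
    return True


def run_tests(ctx):
    return ctx.get("tests_pass", True)


def deploy(ctx):
    ctx["deployed"] = ctx["artifact"]
    return True


STAGES = [("compile", compile_code), ("test", run_tests), ("deploy", deploy)]

ok_context = {}
ok_result = run_pipeline(STAGES, ok_context)
failed_result = run_pipeline(STAGES, {"tests_pass": False})

print(ok_result)
print(failed_result)
```

Real pipelines add parallelism, retries, approvals, and environment promotion on top of this loop, which is where much of the proprietary differentiation in this market lives.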

As our understanding of how pipelines should operate has matured, the industry is integrating the core build, CI, CD, experimentation, configuration management, and GitOps capabilities into a single seamless platform that can move bits into and out of a production environment continuously, and increasingly without human intervention.

Progressive Delivery combines these solutions to provide the right pipeline experience for the right internal and external users.

Few standards exist across this spectrum and, given the amount of software that is continuously being built, significant startups and large vendors have staked a claim to some portion of the Progressive Delivery market. It’s fragmented and most of the solutions are proprietary. There are plenty of sideways acquisitions occurring as the largest vendors scramble to provide an all-in-one platform that controls the entire supply chain.

4.0: The Ironies Of Automation Create Upper Bounds To Developer Productivity Gains.

Does automation measurably improve productivity?

4.1: Waterbed Theory Of Systems Suggests Complexity Can Only Increase.

Larry Wall, the inventor of the Perl programming language, observed that many systems, such as computer languages, contain complexity. Any attempt to hide or abstract the complexity of such a system in one place will invariably cause the complexity to “pop up” elsewhere. It’s metaphorically related to a waterbed mattress, which holds a fixed amount of (non-compressible) water (complexity) that shifts around as pressure displaces one bump for another.

Applied to technology, this implies that as we solve difficult problems and make hard things simpler, entirely new classes of hard problems emerge that we struggle to solve. The Waterbed theory suggests that for every technological achievement we create, we introduce new complexity in different areas of the lifecycle.

4.2: Does $1 Of Savings From DevOps Automation Create More Than $1 Of Complexity?

The Waterbed theory of systems implies that every $1 of savings from DevOps automation will create $1 of complexity elsewhere, since complexity cannot be compressed.

Over time, our use of and dependence upon software increases, which introduces additional categories of complexity, making the monetary (and opportunity) impact of complexity sizable and growing.

With software, the growth of complexity is inevitable. While complexity cannot be eliminated or compressed, it can be managed, contained, and tackled through new forms of innovative industrialization.

4.3: Automation Creates Expectations Among System Designers And In The Remaining Tasks Left To Humans, Leading To Ironies Of Human Involvement.

“Ironies of Automation” is a classic paper by Lisanne Bainbridge, a cognitive psychologist at University College London. She states:

The classic aim of automation is to replace human manual control, planning and problem solving by automatic devices and computers. However, even highly automated systems need human beings for supervision, adjustment, maintenance, and improvement. Therefore, one can draw the paradoxical conclusion that automated systems still are man-machine systems, for which both technical and human factors are important. The more advanced a control system is, so the more crucial may be the contribution of the human operator.

Lisanne Bainbridge

Essentially, the more sophisticated and capable automation becomes, the more the gaps around it grow, and humans must fill those gaps to keep it optimal. In many ways, automation moves the complexity goal posts from one location to another.

4.4: Automation Drives Productivity To A Ceiling Which Can Only Be Broken Through A New Wave of Software Industrialization.

Given a certain set of principles and paradigms, along with access to natural resources, our industry organizes itself around a software industrialization wave. Initially these waves lead to massive gains in productivity as humans leverage new natural resources to overcome the limitations experienced previously.

However, as we become more efficient at exploiting a natural resource, we mature into the wave of industrialization, and then the Ironies of Automation lead to smaller and smaller productivity gains.

5.0: Software Industrialization Waves Undertake 40 Year Fragmentation :: Consolidation Epochs Placing The Developer Industry At The Fragmentation End Of A 2nd Wave.

Industrialization is the transformation of an economy from predominantly agricultural to manufacturing.

Software Industrialization is the transformation of software system construction from predominantly manual to increasingly automated.

Industrialization and Software Industrialization go through waves.

5.1: Software Industrialization Waves Are Defined By How We Incorporate User Feedback.

For the software development industry, an industrialization wave is driven by the paradigm that is used to gather end user feedback for making improvements. As complexity increases, the paradigm allows for automation systems that incorporate feedback so that modifications can be made more quickly than they otherwise could.

How developers and their teams philosophically approach gathering feedback ultimately drives a number of people, process and technology decisions, which in turn both facilitate and constrain the approach used by developers.

5.2: The 1st Wave Of Software Industrialization Driven By Waterfall Principles Lasted 40 Years And Created $50B Of Market Value.

The first wave of the modern software development movement that we understand today began in the 1960s and ran through the turn of the millennium.

There was a small population of software engineers, access to compute equipment on which to build programs was limited and expensive, and software distribution was constrained by physical packaging.

These constraints were reinforced by Waterfall planning and organizational paradigms, which often measured iterations in years.

The underlying compute wasn’t strong enough for systems to make inferences on their own, so humans made each connection manually, which further amplified the long iterative nature of software built using waterfall techniques.

Finally, the code of a system was considered intellectual property and was generally kept hidden with a restrictive license designed to commercially benefit the company that had originally authored the code.

5.2.1: Waves Go Through 40 Year Fragmentation :: Consolidation Cycles That Generate New Markets, Superstar Founders, And Global Employment Spurts.

This first wave of software industrialization lasted roughly 40 years, and for most of its last 15 or so years it experienced market consolidation among the biggest vendors: Oracle, Microsoft, and IBM.

This cycle makes logical sense - when a new productivity paradigm emerges, so will the innovation around the concept funded by a variety of startups that have businesses designed to optimize and exploit the paradigm. This eventually leads to a golden age where the industry has more businesses than it can absorb that are attempting to capitalize upon the paradigm shift. As the paradigm matures into a set of best practices, global software organizations that are adopting the paradigm look to implement the paradigm more cost effectively, creating demand for solutions that deliver more of the paradigm rather than siloed best-of-breed vendors. Eventually market dynamics kick in and a consolidation wave emerges where weaker vendors die or get merged into market leaders.

There were a number of acquisitions in the 90s that allowed the major vendors to consolidate their positions. Microsoft acquired Bruce Artwick Organization, Vermeer Technologies, Aspect Engineering, Dimension X, and Softway Systems; all small purchases but related to expanding their programming technologies.

Oracle waited until after the turn of the millennium before making most of its purchases to expand its developer platform runtimes, including Tangosol and BEA Systems. BEA was once the preeminent Java platform, and Oracle waited until it was a fraction of its former self to acquire the asset for $8.5B in 2008.

5.2.2: IBM Acquiring Rational For $2.1B In 2002 Commercially Defined The Largest 1st Wave Consolidation Transaction And Marked The End Of The 1st Wave.

In an epic fail of a transaction, IBM acquired Rational for $2.1B in 2002. Rational was the premier publisher of all kinds of (mostly visual) software that helped development teams be more Waterfall in their nature. Of course, IBM didn’t know that agility was to emerge as a paradigm shift. Nor could IBM anticipate the emergence of cloud computing, which would undermine its language and application server platform ambitions.

But shortly after the exorbitant purchase, the market altered course and the platform ambitions of Microsoft, IBM and Oracle were undermined.

5.3: We Are In The 2nd Wave Of Software Industrialization!! It’s a $50B ARR Market, 1350 Companies, And Growing 22% Annually. It’s Monstrous.

Software development has completed the first wave, and is currently experiencing the golden age of its second wave.

5.3.1: The Agile Manifesto And Release Of Git Shifted Software Industrialization Into A New Wave.

The Agile Manifesto was released in 2001 by a group of luminaries (actually, they thought of themselves as anarchists in rebellion against corporate policies).

In order to succeed in the new economy, to move aggressively into the era of e-business, e-commerce, and the web, companies have to rid themselves of their Dilbert manifestations of make-work and arcane policies.

Agile Manifesto Authors

The Agile Manifesto was born out of a desire to be more competitive and to overcome the complexity (bureaucracy) embedded within corporate structures (i.e., the best practices encoded into the Waterfall way of software).

Later in the decade, in 2005, Linus Torvalds released the git version control system. Linux was an open source project of large scope, and changes to the kernel were passed around as patches within emails and archives. This mode of interaction was easy to learn but slow, and it didn’t entirely support a fully distributed collaborative group. git enabled truly distributed collaboration, high speed, and unlimited parallel branches. git’s major contribution was the ability for outsiders to participate without first being granted access to the version control system. git opened up the potential for unsolicited contributions.

Unsolicited contributions + iterative agile practices were the tinder that unlocked pent up creative energy, transitioning that energy from corporates into the hands of the creator class: developers.

5.3.2: The Agile Wave Of Software Industrialization Has Created $500B Of Market Value That Spans Across Dozens Of Publicly Traded Companies.

The DevOps tooling, infrastructure, and platform markets had to be reimagined to support distributed collaboration and agile practices. This caused the incumbent platforms from Microsoft, Oracle, and IBM to be disrupted and a new crop of superstar companies to emerge that capitalized upon the principles embodied by this new way of development.

Software development became a competitive endeavor to the point where most companies on the planet strive to leverage software as their differentiation. Cloud-based systems, with user populations accepting mobile-first and SaaS architectures, gave way to homogeneous environments that allowed developers to observe their end users in virtually real time, dramatically dropping the time to feedback from months to hours.

5.3.3: The Developer-Led Landscape, A Reflection Of This 2nd Wave, Now Comprises 1350 Companies Generating $50B ARR That Have Created $500B Market Value.

While the collection of companies that dominated the 1st wave generated $50B of market value, the 2nd wave of companies now collectively generates that much in revenue every year. They have created at least $500B of new market value, and it’s probably closer to $1T.

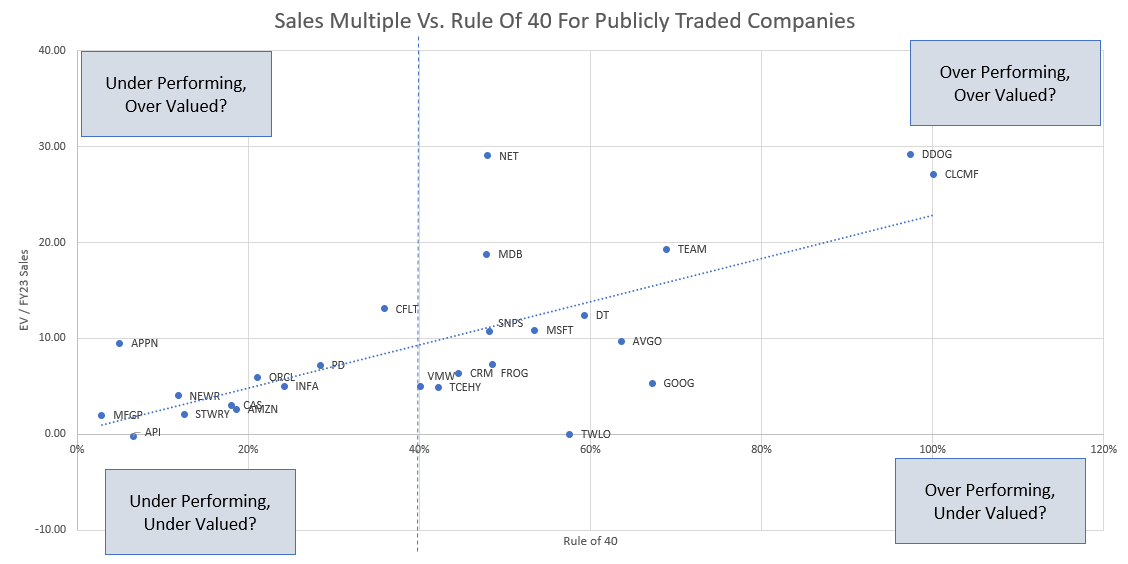

5.3.4: The Agile Wave Is Driven By 40 Publicly Traded Companies, More Than A 4X Increase Over The Waterfall Wave.

Developer-led companies are generally efficient. They deliver essential productivity, infrastructure, and platforms used by developers at global scale. With the number of developers that need these solutions in the 10s of millions and (hopefully) growing, the revenue scale that a successful new developer tool can obtain is significant. And since many developer platforms and tools are evaluated by developers themselves, many organizations can create a business model where the cost of customer acquisition for dev platforms is lower than non-dev platforms.

5.4: We Are Exiting The Fragmentation Epoch And Entering The Consolidation Epoch For The Agile Wave Establishing A “Peak DevOps” Moment.

Industrialization waves go through fragmentation / consolidation epochs.

For the past 15 years, we have been in a fragmentation epoch (or “expansion” if that better suits your sensibilities) for the Agile wave.

One point supporting this is that the rate of growth of new commercial companies has continued to increase over the past 15 years, with nearly 200 new entrants emerging within the past year.

5.4.1: Version Control Vendors Are Best Positioned To Be Agile Wave Consolidators Due To Developer Concentration, Being The Store Of Record For Strategic IP, And The Historical Struggle Of Runtime Platforms To Shift Left.

Code, and the modifications made to it, are a strategic store of value. Version control systems and the vendors that produce them have leverage from storing their customers’ strategic IP, but also from visibility that occurs by observing the concentration of developers collaborating on the code through the version control system.

Additionally, massive runtime platforms like those controlled historically by Oracle, Software AG, Tibco, Informatica, and the hyperscalers have regularly failed at consolidating through a shift left. Their attempts to add developer tooling and infrastructure as a core product line tend to be half-hearted or, more often, tailored to the unique properties of the runtime, making their DevOps platforms narrowly suited to dev teams that already use those runtimes.

5.4.2: Microsoft Is Platforming (err, Consolidating) From Code To Cloud; Though Atlassian, Gitlab And CI/CD Vendors Are Consolidating Developer Experience.

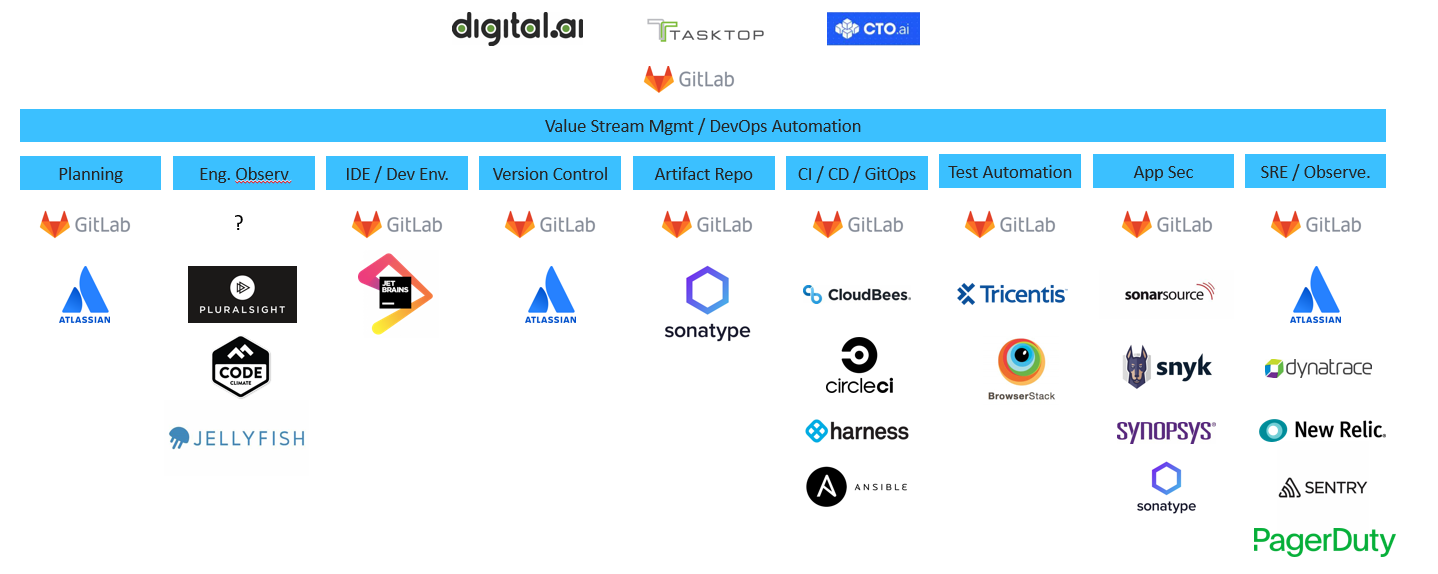

Consider this crude visual that maps where the largest developer workflow players fit within the landscape.

Only GitHub, IBM, and Hashicorp have product lines that span all three of the major categories in the developer landscape.

Microsoft has done the most platforming: GitHub is the #1 version control system and is approaching 100M collaborating developers, and Microsoft also controls the world’s most popular code editor in VS Code and the 2nd largest developer runtime in Azure. Further, Microsoft’s interconnections between VS Code, Azure, and GitHub have become so tight that it’s difficult not to view their offering as a seamless whole.

IBM and Hashicorp have foothold technologies within each of the major categories. While each has a desire to consolidate developer activity, their penetration is siloed, and they have had questionable results in leveraging developer activity within one silo to create network effects within another.

Atlassian, as a version control vendor, and most of the CI/CD vendors such as Harness and Cloudbees, have attempted to leverage their large (but not dominant) positions to consolidate more of the developer workflow that extends to their left or right, but have increasingly been seen as offering niche platforms that cater to developers with certain specializations.

5.4.3: Gitlab: Consolidating By Building An Opinionated All-In-One Platform Of “Good Enough” DevOps Automation.

While Microsoft dominates by having different, dominant leaders in version control, text editing, and runtime platforms that each have extension tentacles that reach out across the DevOps spectrum, Gitlab is attempting to build a singular platform that extends to the far left and far right of the spectrum.

Each of the silos in the dev landscape has 2 top vendors that collectively own at least 60% of their micro-market. These vendors defined the paradigm of how their feature set should apply in an agile market, and that led to brand & community lock-in with developers that upstart companies will struggle to displace.

Look at the success of Gitlab, which trades off a platform that “does it all” with a heavily opinionated approach. While Gitlab’s total revenues are only $300M, a fraction of the total revenues of the specialist vendors listed for each niche, Gitlab poses a strong commoditization threat: they generally believe that they can offer most of the “shift left” dev tooling at a fraction of what competitors charge, in exchange for getting a premium for the services that shift right, such as security and observability.

5.4.4: Mega Vendors With Platforming And Consolidation Power Make Selective Acquisitions To Broaden Their Capability Sets.

Consolidation happens around the vendors with strong balance sheets and potential platforming power to extend to opposite ends of the delivery lifecycle spectrum.

Most acquisitions happen in one of three forms:

Talent. Acquisitions of assets and talent with expertise in specialized areas to more aggressively build out expansionary capabilities of a platform. The IP assets may or may not be reused. The value of the business acquired is minimal. Arguably this is API Fortress acquired by Sauce Labs, Manifold acquired by Snyk, or Drone.io acquired by Harness.

Strategic. Acquisition of a strong performer or market leader in an emerging category to immediately and credibly enter into an adjacent market. The value of the business, IP, and talent are given equal measures and acquisition costs are significant. This would be Microsoft’s acquisition of GitHub, Salesforce’s acquisition of Slack, or Cisco’s acquisition of AppDynamics. Price justification is driven by expected cross-platform leverage or necessity to avoid impending doom.

Financial. Acquisitions of large revenue, low growth vendors that cannot afford to branch out of their existing narrow market lane. The acquirer is driven by monetizing profits from potential cost efficiencies and sales leverage from existing channels. The Chef and Puppet acquisitions by major vendors both were financially driven with consideration amounts for each less than 4 times their revenues even though both businesses were >$100M ARR businesses within the strategic configuration management space (what CD was before CD was).

5.5: As The Consolidation Epoch Unfolds, We Will Discover Companies That Are Sustainable And Those That Fail From Insufficient Capitalization.

A company in the developer-led landscape must cross $10M ARR in order to be a going concern that could theoretically be structured for profitability with enough customer-based funding to support maintenance, improvements, and limited marketing.

This was previously a $5M ARR metric, but the demanded pace of change for new software and its growing complexity have driven up the cost of maintenance by requiring larger sustaining R&D and customer support teams than were previously required.

5.5.1: 935 Out Of 1350 Companies In The Developer-Led Landscape Generate Less Than $10M ARR And Face Significant Survival Risks.

Companies with less than $10M ARR must either find new VC financing, seek an acquisition, or grow through customer-based financing. With the markets tightening and inflation pressures increasing, VCs will be less willing to finance fringe, niche, or unproven developer-led businesses.

As the developer-led landscape has grown, the rate of new startups has increased dramatically, with (by my analysis) many of those new startups tackling narrower bands of problems than startups from a decade ago. Many of these startups will face questions as to whether the market they are targeting is large enough to warrant the risk for a VC-style investment.

Developer-led companies that are unable to find customer or VC-based financing will face serious risks to their survival.

5.5.2: Up To 500 Companies In The Developer-Led Landscape Could Face Bankruptcy Within The Next Two Years.

In the most active years, there are 40 acquisitions of developer-led businesses (PE, financial, strategic, and acquihires), 5 IPOs, and another 30 that achieve “potentially self-sustaining operations”. In other words, the “exit rate” for companies that have an outcome is (generously) 75 per year.

Additionally, in the past year there have been fewer than 75 fundraising events for the companies that generate less than $10M ARR.

Taken together, roughly 150 companies each year get an exit or new financing. And many of these figures are being curtailed by the current market downturn, higher inflation, and VC tightening.

Cumulatively, and again generously, this implies 3 years of events that enable companies to continue surviving, for a total of 450. Of the 935 companies under $10M ARR, that leaves 485 that do not achieve one of these survival events and are at risk of closure, bankruptcy, or a forced massive pivot!
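The arithmetic behind this estimate can be sketched in a few lines of Python. This is a back-of-the-envelope check, not a forecast model: every figure is taken from the text above, the function and variable names are illustrative, and treating the "fewer than 75" fundraises as exactly 75 is a deliberately generous assumption.

```python
def companies_at_risk(under_threshold: int, exits_per_year: int,
                      financings_per_year: int, years: int) -> int:
    """Companies left without an exit or new financing after `years`."""
    survival_events = (exits_per_year + financings_per_year) * years
    return max(under_threshold - survival_events, 0)


# Figures quoted in the text (generous, best-year values).
exits_per_year = 40 + 5 + 30    # acquisitions + IPOs + self-sustaining operations
financings_per_year = 75        # assumption: upper bound of "fewer than 75" fundraises
sub_10m_arr_companies = 935     # companies under $10M ARR

at_risk = companies_at_risk(sub_10m_arr_companies, exits_per_year,
                            financings_per_year, years=3)
print(at_risk)  # 485
```

With these inputs the survival events total 450 over three years, leaving 485 companies at risk, which is in line with the "up to 500" in the section heading. Lowering either yearly rate to reflect a downturn pushes the number higher.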

Since most companies VC-finance themselves for 2 years, the clock is ticking.

5.6: Ironically, Agility Will Cause The Agility Wave To End.

The prime characteristics that define the Agile wave of software industrialization are improving cycle time (more agility) and tight feedback loops from end users (more agility). As the software team becomes increasingly more flexible and capable of responding to feedback, the state of the system achieves harmony.

But is it sustainable?

It’s incredibly challenging to build an organization that achieves this level of iterative harmony that lasts for a sustainable period. As systems grow in complexity and the end user feedback increases, it becomes increasingly difficult for a human to reason about the impact a change might have on the system.

Effectively, agile principles of continuous improvement can get us stuck on the feedback hamster wheel.

Or perhaps, more apropos, is the nature of automation as demonstrated by Lucille Ball in the chocolate factory. You can go faster, but can the humans keep up?

Miriam Posner, an assistant professor of Information Studies and Digital Humanities at UCLA, has been studying the impact of Agile on organizations for many years. In her essay, “Agile and the Long Crisis of Software”, she notes from interviews:

Even as the team is pulled in multiple directions, it’s asked to move projects forward at an ever-accelerating pace. “Sprinting,” after all, is fast by definition. And indeed, the prospect of burnout loomed large for many of the tech workers I spoke to. “You’re trying to define what’s reasonable in that period of time,” said technical writer Sarah Moir. “And then run to the finish line and then do it again. And then on to that finish line, and on and on. That can be kind of exhausting if you’re committing 100 percent of your capacity.”

—Miriam Posner

And, as we have discussed previously, agility has brought forward other complexities including the richness of the ecosystem, the need for more rapid and continuous upskilling, a sense of heavier surveillance and observability across all developer related tasks, and a generally more involved process needed to ship software.

So what happens next?

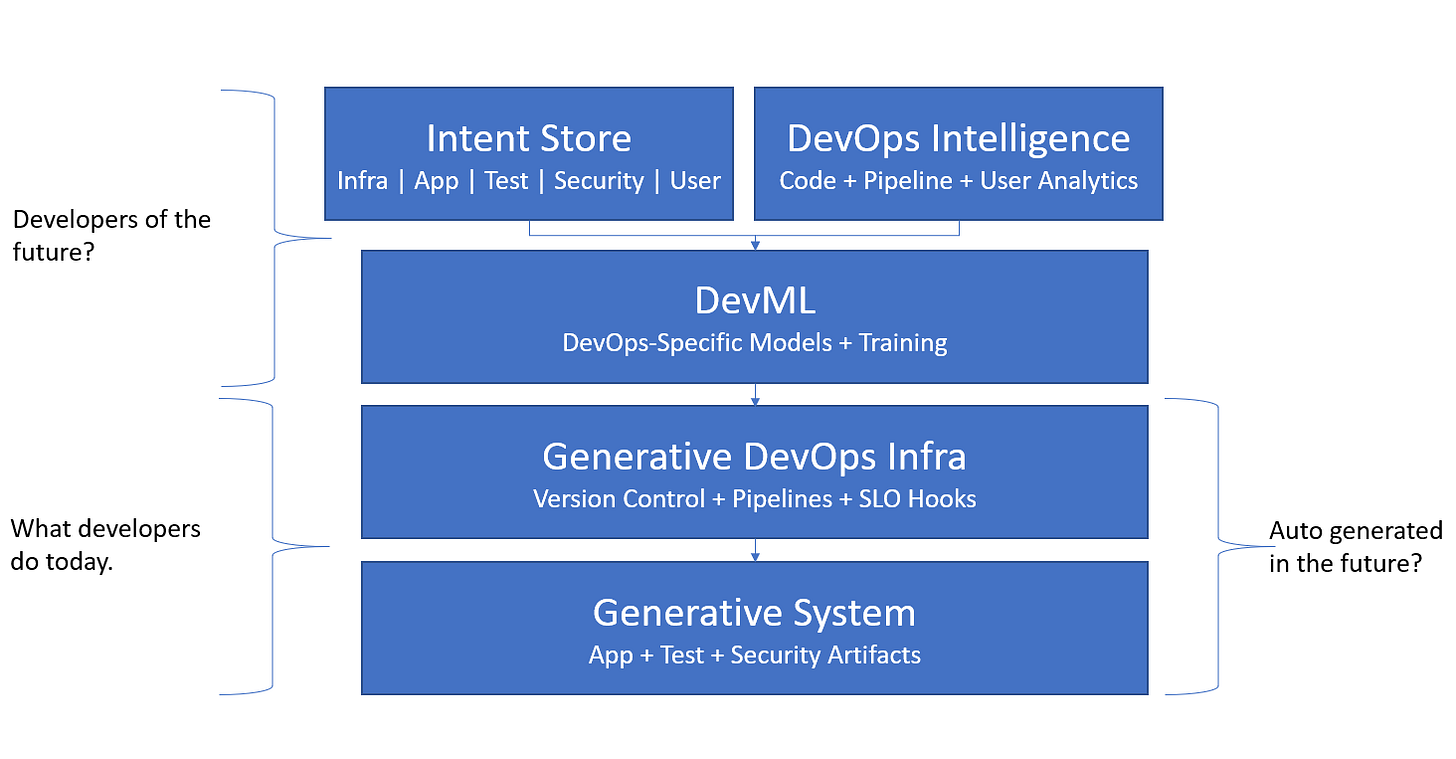

6.0: A Futuristic Third Wave Of Software Industrialization Would Be Defined By Robots, Data, and Instant Feedback.

Ultimately, the incremental increase of software talent and their limited wage increases create marginal improvements in our collective productivity and output in the creation and maintenance of new software systems.

6.1: Productivity Improvement Through Robotic Software Industrialization That Overcomes The Cumulative Complexity Of The Agile Wave Is The Most Viable Way To Reconcile The Demand :: Supply Gap.

Productivity that can overcome the cumulative complexity from the last 20 years is the most viable way to reconcile this demand :: supply gap.

We use tools to save time and effort on laborious tasks. Over time, innovation causes tools to gradually increase in sophistication. The result of this evolution is robots, which are merely advanced tools that require smaller amounts of human input to complete a task.

These robots, whether physical or digital, require considerable human intervention in their design, production, installation, and maintenance.

Under what conditions might such a robotic evolution for the software development process take place?

Software tasks can be automated to produce reliable outputs.

Technological feasibility - speed, performance, and sophistication of robots.

The unit profit for automating a task is worthwhile.

Legal permission exists for robots to access data for making decisions.

Software stakeholders respond constructively to robotic participation.

6.2: Software Industrialization Leverages Data As A Resource To Transform Productivity And Outputs.

The Industrial Revolution was driven by socioeconomic, cultural, and technological changes that made it possible for society to substantially increase consumption of natural resources which made mass production possible.

Software Industrialization is driven by socioeconomic, cultural, and technological changes that make it possible for software teams to substantially increase the consumption of data, which makes mass production and hands-free maintenance of software systems possible.

The Industrial Revolution allowed workers to acquire new and distinctive skills. Instead of being craftsmen working with hand tools, they became machine operators subject to factory discipline. Society built a sense of mastery over natural resources.

With Software Industrialization workers will acquire new and distinctive skills. Instead of being coders working with IDEs, they will become model and machine learning artisans subject to system sustainability. Software teams will build a mastery over data rather than code.

I’m reminded of the 2017 talk by Jeff Dean at Google, and his remarkable comment that with ML, Google Translate went from 500K lines of stat-focused code to 500 lines of TensorFlow code – pretty amazing. Is that the future of software development? The systems that will create real value will be those that can up-level developer productivity and not just shift complexity to somewhere else in the system.

— Radhika Malik, my very smart(er) partner at Dell Technologies Capital

6.3: Instant Feedback And Modifications Made Without Human Intervention Would Define The Principles For A Third Wave Of Software Industrialization.